Is Chromium Omaha Updater Bringing Your Site or Service Down?

Published September 19, 2025

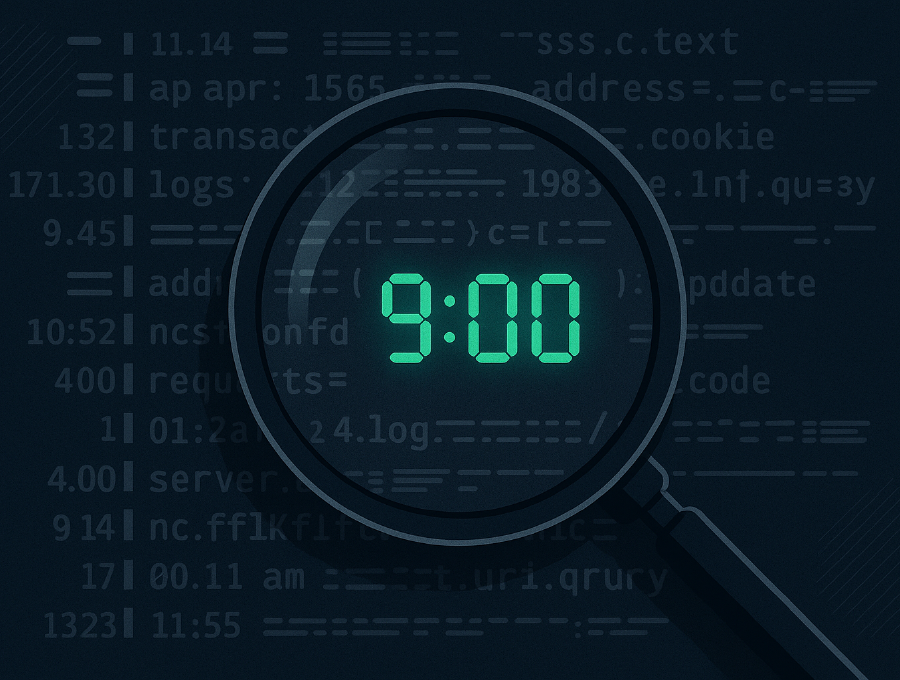

For the last two weeks or so, one of the major UK National Health Service (NHS) websites I work on has been hit by something strange at 9:00 a.m. every day, pushing it to the brink each time. The regularity of the 9:00 a.m. timing was suspicious.

What we found turned into a little detective story, one that might be useful for others dealing with this same mysterious performance drop.

The Symptom

- Every day at 9 a.m., performance degraded significantly, with the worst degradation at around 9:05 a.m.

- Logs pointed to bursts of requests that didn’t fit the normal user profile.

- The traffic wasn’t obviously malicious, but it wasn’t normal browsing either. Nor was it AI traffic.
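One way to confirm a time-of-day pattern like this is to bucket request timestamps by minute of day across several days of logs. The following is a minimal sketch, not the tooling we actually used: it assumes ISO 8601 timestamps have already been extracted from the access logs, and the function name and sample data are hypothetical.

```python
from collections import Counter
from datetime import datetime


def requests_per_minute_of_day(timestamps):
    """Count requests per HH:MM bucket, ignoring the calendar date."""
    counts = Counter()
    for ts in timestamps:
        moment = datetime.fromisoformat(ts)
        counts[moment.strftime("%H:%M")] += 1
    return counts


# Hypothetical sample: timestamps pulled from two days of access logs.
sample = [
    "2025-09-15T08:59:40",
    "2025-09-15T09:00:02",
    "2025-09-15T09:00:30",
    "2025-09-16T09:00:05",
    "2025-09-16T09:05:11",
    "2025-09-16T14:30:00",
]

counts = requests_per_minute_of_day(sample)

# Print the busiest minutes of the day, most frequent first.
for minute, count in counts.most_common(3):
    print(minute, count)
```

A bucket that stands out at the same minute on every day, as 09:00 does in this sample, points to scheduled or automated traffic rather than ordinary browsing.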

The Investigation

After combing through the logs (together with fellow developers on our team and our third-party cloud provider), we found requests initiated by the Chromium Omaha updater. For context, the Omaha updater is part of Chromium’s background update mechanism. In our case, though, it was firing requests in a way that directly impacted our service.

We found little to no public documentation on this exact behaviour, which made it all the more puzzling.

The Fix

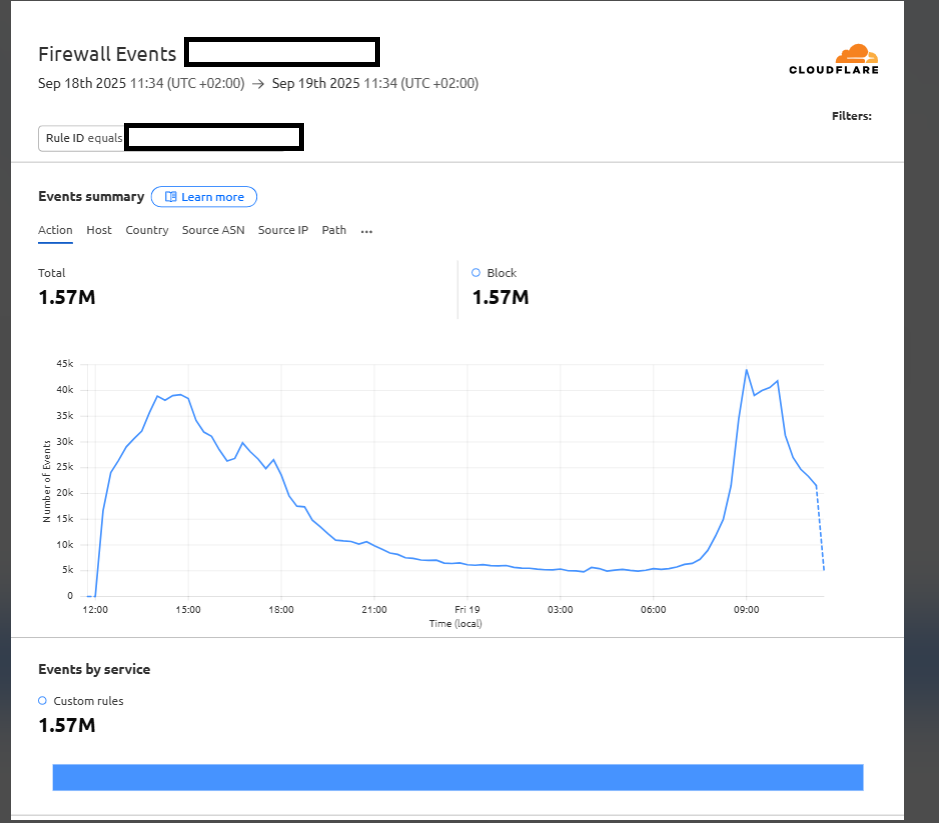

Mitigation involved blocking these updater requests. Cloudflare gave us the tools to do this efficiently, using request filtering rules without disrupting genuine user traffic.

The exact rule we tested looked something like this:

(http.host eq "our.domain" and http.request.uri.query contains "ncgfdaipgceflkflfffaejlnjplhnbfn")

Breaking this down:

http.host eq "our.domain"

Ensures the filter only applies to requests targeting our own domain. That way, nothing unrelated is caught.

http.request.uri.query contains "ncgfdaipgceflkflfffaejlnjplhnbfn"

Matches requests where the query string includes the long, seemingly random identifier used by the Chromium Omaha updater. This signature was consistent across all the suspicious requests we saw in the logs.

Together, these two checks mean only the unwanted updater traffic is blocked, while normal web users are completely unaffected.
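Before enabling a rule like this, it can help to replay logged URLs through an equivalent check and confirm that nothing legitimate would be caught. The sketch below approximates the two conditions in Python. It is not Cloudflare's implementation, `would_block` is a hypothetical helper, `our.domain` is the same placeholder used in the rule, and the example URLs are invented for illustration.

```python
from urllib.parse import urlsplit

# Identifier seen in the query string of the suspicious requests.
UPDATER_ID = "ncgfdaipgceflkflfffaejlnjplhnbfn"
# Placeholder for the protected hostname, as in the rule above.
PROTECTED_HOST = "our.domain"


def would_block(url):
    """Return True if the URL matches both conditions of the rule."""
    parts = urlsplit(url)
    host_matches = parts.hostname == PROTECTED_HOST
    # parts.query is the raw (undecoded) query string.
    query_matches = UPDATER_ID in parts.query
    return host_matches and query_matches


# Hypothetical URLs, standing in for entries taken from access logs.
examples = [
    "https://our.domain/?id=" + UPDATER_ID,
    "https://our.domain/appointments?page=2",
    "https://other.example/?id=" + UPDATER_ID,
]

for url in examples:
    print(would_block(url), url)
```

Only the first example satisfies both conditions. The second lacks the identifier and the third targets a different host, so neither would be blocked.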

This rule stopped the spurious requests from ever hitting the origin. To our surprise, within roughly 24 hours the filter had blocked 1.57 million requests, with the largest surge occurring between 9:00 and 9:05 a.m.

Was This a DDoS Attack? A Novel DDoS Vector!

One unanswered question is why our domain was being queried in the first place.

The Chromium Omaha updater is designed to check legitimate update servers, not arbitrary domains like ours. The fact that 1.57 million extra requests hit our infrastructure in a single 24-hour period, with the same pattern recurring daily for about two weeks, suggests one of two possibilities:

- Misconfiguration – somewhere, a system has been incorrectly set up to use our domain as its update endpoint.

- Abuse – someone has intentionally pointed a large number of updaters at our domain.

We haven’t found confirmation either way yet. But, in both cases, the result is the same: an unintended denial-of-service risk. While this didn’t resemble a classic volumetric DDoS attack, the sheer persistence of the requests was enough to disrupt performance.

Why It Matters

- If accidental, it highlights how misconfigurations elsewhere on the internet can have real consequences for unrelated domains.

- If deliberate, it suggests a novel vector for low-level DDoS attacks: convincing large fleets of software to call home to the wrong server.

Either way, the lesson is clear: monitoring, logging, and quick action at the edge are crucial.

Lessons Learned

- Updater traffic can be disruptive – even well-intentioned software can strain systems if misconfigured or overly persistent.

- Document and share (well, we know that) – we couldn’t find others talking about this exact behaviour, so writing it up might help someone else spot the same issue sooner. I am, of course, making sure our NHS Cyber Security team knows about this, and I am guessing they are likely to make an official publication.

Conclusion

Not all outages come from malicious actors or obvious misconfigurations. Sometimes, it’s something as mundane as an updater knocking on the wrong door at the wrong time.

If you’ve seen similar requests from Chromium’s Omaha updater, it’s worth checking how they’re routed and whether filtering at the edge can protect your platform.